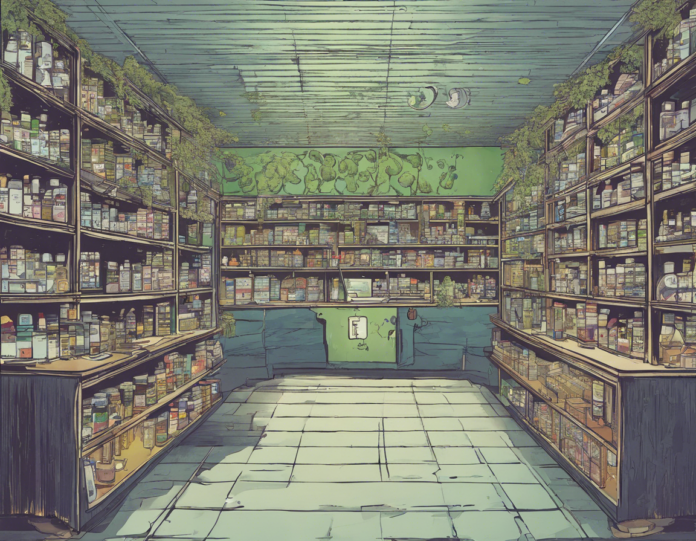

Welcome to the Information Entropy Dispensary, where we delve into the intriguing world of chaos and entropy. In this article, we will unravel the mysteries of chaos theory, information entropy, and how they are interconnected in shaping the world around us.

Understanding Chaos Theory

Chaos theory is a branch of mathematics that studies deterministic dynamical systems whose behavior is highly sensitive to initial conditions, a phenomenon commonly referred to as the butterfly effect. Even very small changes in initial conditions can lead to vastly different outcomes over time.
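To make the butterfly effect concrete, here is a minimal sketch using the logistic map, a standard textbook example of a chaotic system. The map, the parameter value r = 4.0, the starting points, and the helper name `logistic_orbit` are all illustrative choices, not something prescribed by this article.

```python
def logistic_orbit(x0, r=4.0, steps=60):
    """Iterate the logistic map x -> r * x * (1 - x) and return the whole orbit."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


# Two starting points that differ by only one part in ten billion.
a = logistic_orbit(0.2)
b = logistic_orbit(0.2 + 1e-10)

# Distance between the two orbits at every step.
gaps = [abs(p - q) for p, q in zip(a, b)]

for step in (0, 10, 20, 30, 40, 50):
    print(step, gaps[step])
```

The printed gap starts out tiny and grows until the two orbits are effectively unrelated, even though both follow exactly the same deterministic rule.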

Key Concepts in Chaos Theory

- Non-linear Dynamics: Chaos theory deals with non-linear dynamical systems, which means that the behavior of the system as a whole cannot be simply derived by summing up the behaviors of its individual parts.

- Attractors: These are the states towards which a dynamic system tends to evolve over time. Strange attractors are those that have a fractal structure and represent the long-term behavior of chaotic systems.

- Bifurcation: This occurs when a small change in a parameter of a system leads to a sudden qualitative change in its behavior, resulting in the creation of new attractors.
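Attractors and bifurcations can be seen numerically in the same kind of toy system. The sketch below assumes the logistic map as the example and uses a hypothetical helper, `long_term_states`, that discards an initial transient and then records which (rounded) values the orbit keeps visiting. The parameter values are illustrative.

```python
def long_term_states(r, x0=0.2, transient=2000, keep=64, digits=3):
    """Return the distinct rounded values a logistic-map orbit visits after settling."""
    x = x0
    # Let the orbit settle onto its attractor before recording anything.
    for _ in range(transient):
        x = r * x * (1.0 - x)
    seen = set()
    for _ in range(keep):
        x = r * x * (1.0 - x)
        seen.add(round(x, digits))
    return sorted(seen)


# As r increases, the attractor changes from one point to two, then four,
# and eventually to a chaotic set with many distinct values.
for r in (2.8, 3.2, 3.5, 3.9):
    states = long_term_states(r)
    print(r, len(states))
```

Each jump in the number of long-term states as r increases corresponds to a bifurcation: a small parameter change produces a qualitatively different attractor.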

The Role of Information Entropy

Information entropy, a concept from information theory, measures the uncertainty or disorder in a system. In the context of chaos theory, information entropy plays a crucial role in quantifying the unpredictability and complexity of chaotic systems.

Shannon Entropy

Shannon entropy, named after Claude Shannon, is a fundamental concept in information theory that quantifies the average amount of information produced by a random variable. In chaotic systems, Shannon entropy can be used to measure the amount of unpredictability or randomness in the system’s behavior.
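For a discrete random variable with outcome probabilities p_i, Shannon entropy in bits is H = -Σ p_i log2(p_i). The following is a minimal sketch of that definition; the function name `shannon_entropy` and the example distributions are illustrative assumptions.

```python
import math


def shannon_entropy(probabilities):
    """Shannon entropy in bits of a discrete probability distribution."""
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    # Outcomes with zero probability contribute nothing to the sum.
    return sum(-p * math.log2(p) for p in probabilities if p > 0)


fair_coin = shannon_entropy([0.5, 0.5])      # 1.0 bit
biased_coin = shannon_entropy([0.9, 0.1])    # about 0.469 bits
certain = shannon_entropy([1.0])             # 0.0 bits
eight_sided = shannon_entropy([1 / 8] * 8)   # 3.0 bits
print(fair_coin, biased_coin, certain, eight_sided)
```

The more evenly probability is spread across outcomes, the higher the entropy. A fully predictable outcome carries no information at all.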

Chaos and Entropy in Natural Systems

Weather Patterns

Weather systems are classic examples of chaotic systems characterized by their sensitivity to initial conditions. Small variations in atmospheric conditions can lead to vastly different weather outcomes, making long-term weather prediction inherently uncertain.

Biological Systems

Biological systems, such as the human brain and ecosystems, can also exhibit chaotic behavior. The complexity and non-linear dynamics of these systems give rise to emergent phenomena and unpredictable behavior, which can be analyzed using concepts from chaos theory and information entropy.

Applications of Chaos Theory and Information Entropy

Cryptography

In cryptography, ideas from chaos theory and information entropy are used in the design and evaluation of encryption schemes. Chaotic maps have been proposed as sources of pseudo-random sequences for key generation, and information entropy is used to measure how unpredictable keys and other cryptographic outputs are.
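As a rough illustration of using entropy to gauge predictability, the sketch below estimates the entropy per byte of some key material from its byte frequencies. The helper name `byte_entropy` and the sample inputs are hypothetical. This is only a coarse statistical check: a high score does not by itself show that a key is secure.

```python
import math
from collections import Counter


def byte_entropy(data: bytes) -> float:
    """Empirical Shannon entropy in bits per byte, based on byte frequencies."""
    if not data:
        raise ValueError("data must not be empty")
    n = len(data)
    counts = Counter(data)
    return sum(-(c / n) * math.log2(c / n) for c in counts.values())


weak_key = b"\x00" * 32          # one repeated byte: fully predictable
spread_key = bytes(range(256))   # every byte value exactly once

print(byte_entropy(weak_key))    # 0.0
print(byte_entropy(spread_key))  # 8.0
```

Note that `spread_key` scores the maximum of 8 bits per byte even though it is an obvious pattern, which is why frequency-based entropy is best treated as a sanity check rather than a security proof.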

Financial Markets

Financial markets are complex systems that can exhibit chaotic behavior due to the interactions between factors such as investor behavior, economic indicators, and market sentiment. Chaos theory and information entropy are applied to analyze market trends, study the build-up to financial crises, and inform investment strategies.

Frequently Asked Questions (FAQs)

- What is the relationship between chaos theory and information entropy?
  - Chaos theory studies the behavior of dynamical systems that are sensitive to initial conditions, while information entropy quantifies the unpredictability and disorder in a system. Entropy therefore gives a way to measure how unpredictable a chaotic system's behavior is.

- How is chaos theory applicable in real-world scenarios?
  - Chaos theory is used in weather prediction, biological systems analysis, cryptography, financial markets, and many other fields to study complex systems and phenomena.

- Can chaos theory help in predicting future events accurately?
  - While chaos theory provides insights into the behavior of dynamical systems, the inherent sensitivity to initial conditions limits the accuracy of long-term predictions.

- What are some common misconceptions about chaos theory?
  - A common misconception is that chaos implies randomness. Chaotic systems are deterministic; their behavior only appears random because tiny differences in initial conditions grow rapidly over time.

- How is information entropy calculated in practical applications?
  - Information entropy is calculated based on the probabilities of different outcomes in a system, with higher entropy indicating greater unpredictability and disorder.
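In practice the outcome probabilities are usually not known in advance and have to be estimated from data. One simple approach, sketched here under illustrative assumptions, is to sort observations into bins and use the bin frequencies as probabilities. The logistic map, the bin count, and the helper names `binned_entropy` and `logistic_samples` are choices made for this example only.

```python
import math
from collections import Counter


def binned_entropy(samples, bins=16):
    """Estimate Shannon entropy in bits for values in [0, 1] from bin frequencies."""
    n = len(samples)
    # Clamp so that a value of exactly 1.0 falls into the last bin.
    counts = Counter(min(int(x * bins), bins - 1) for x in samples)
    return sum(-(c / n) * math.log2(c / n) for c in counts.values())


def logistic_samples(r, x0=0.2, transient=1000, n=10000):
    """Collect n logistic-map values after discarding an initial transient."""
    x = x0
    for _ in range(transient):
        x = r * x * (1.0 - x)
    out = []
    for _ in range(n):
        x = r * x * (1.0 - x)
        out.append(x)
    return out


periodic = binned_entropy(logistic_samples(3.2))  # orbit alternates between two bins
chaotic = binned_entropy(logistic_samples(4.0))   # orbit spreads over all bins
print(periodic, chaotic)
```

The periodic regime yields about 1 bit, since the orbit only ever lands in two bins, while the chaotic regime comes much closer to the 4-bit maximum for 16 bins. The estimate depends on the number of bins and the amount of data, so values are only comparable when those are held fixed.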

In conclusion, chaos theory and information entropy offer valuable insights into the complexity and unpredictability of natural and human-made systems. By understanding the principles of chaos and entropy, we can better navigate the intricate dynamics of our world and harness them for various applications in science, technology, and everyday life.